The artificial intelligence (AI) pioneer Geoffrey Hinton recently resigned from Google, warning of the dangers of the technology “becoming more intelligent than us”. His fear is that AI will one day succeed in “manipulating people to do what it wants”.

There are reasons we should be concerned about AI. But we frequently treat or talk about AIs as if they are human. Stopping this and realising what they actually are could help us maintain a fruitful relationship with the technology.

In a recent essay, the US psychologist Gary Marcus advised us to stop treating AI models like people. By AI models, he means large language models (LLMs) like ChatGPT and Bard, which are now being used by millions of people on a daily basis.

He cites egregious examples of people “over-attributing” human-like cognitive capabilities to AI that have had a range of consequences. The most amusing was the US senator who claimed that ChatGPT “taught itself chemistry”. The most harrowing was the report of a young Belgian man who was said to have taken his own life after prolonged conversations with an AI chatbot.

Marcus is correct to say we should stop treating AI like people – conscious moral agents with interests, hopes and desires. However, many will find this difficult to near-impossible. This is because LLMs are designed – by people – to interact with us as though they are human. And we’re designed – by biological evolution – to interact with them likewise.

The reason LLMs can mimic human conversation so convincingly stems from a profound insight by computing pioneer Alan Turing, who realised that it is not necessary for a computer to understand an algorithm in order to run it. This means that while ChatGPT can produce paragraphs filled with emotive language, it doesn’t understand any word in any sentence it generates.

The LLM designers successfully turned the problem of semantics – the arrangement of words to create meaning – into statistics, matching words based on their frequency of prior use. Turing’s insight echoes Darwin’s theory of evolution, which explains how species adapt to their surroundings, becoming ever-more complex, without needing to understand a thing about their environment or themselves.
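To make the idea of "matching words based on their frequency of prior use" concrete, here is a minimal sketch of a toy bigram model in Python. It is an illustration only, not how ChatGPT or any production LLM is built: real systems use large neural networks to predict tokens, and the function names (`train_bigrams`, `most_likely_next`) and the tiny corpus are assumptions invented for this example. The point is simply that a program can pick a plausible next word from counts alone, with no grasp of what the words mean.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word.

    Hypothetical helper for illustration; real LLMs do not work from raw counts.
    """
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the word seen most often after `word`, or None if it was never followed by anything."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus (an assumption for this sketch).
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)

# "the" is followed by "cat" twice and "mat" once, so "cat" is chosen.
print(most_likely_next(model, "the"))
```

The model "knows" that "cat" tends to follow "the" in exactly the same way it would know any other regularity in its input: as a tally. That is competence at continuing a sentence without any comprehension of cats.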

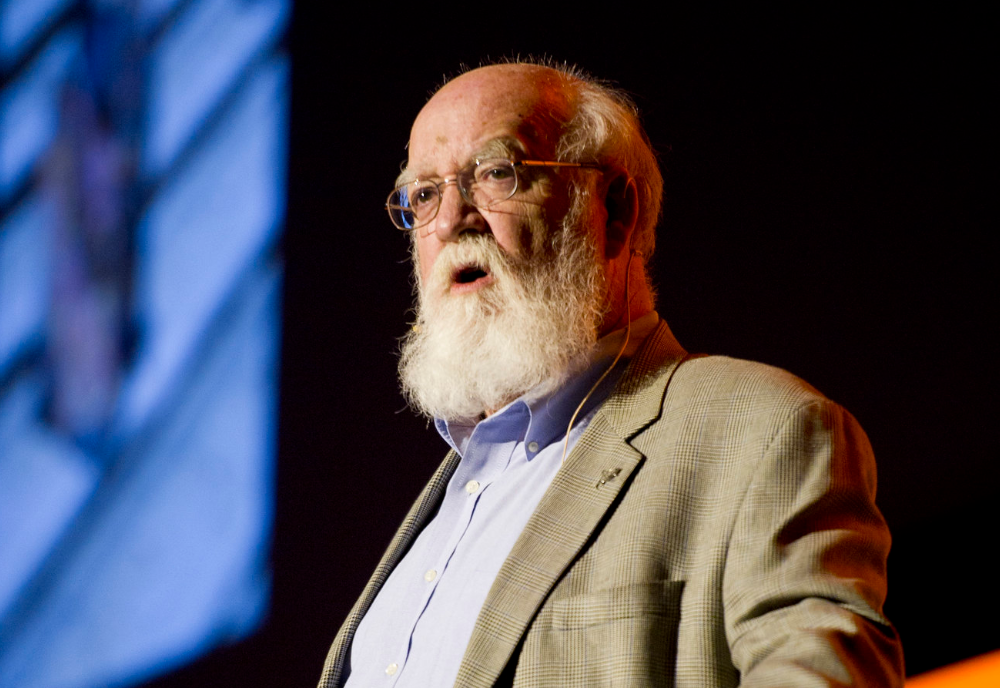

The cognitive scientist and philosopher Daniel Dennett coined the phrase “competence without comprehension”, which perfectly captures the insights of Darwin and Turing.

Another important contribution of Dennett’s is his “intentional stance”. This essentially states that in order to fully explain the behaviour of an object (human or non-human) we must treat it like a rational agent. This most often manifests in our tendency to anthropomorphise non-human species and non-living entities.

But the stance is useful. For example, if we want to beat a computer at chess, the best strategy is to treat it as a rational agent that ‘wants’ to beat us. We can explain that the computer castled, for instance, because “it wanted to protect its king from our attack”, without any contradiction in terms.

We may speak of a tree in a forest as ‘wanting to grow’ towards the light. But neither the tree nor the chess computer represents those ‘wants’ or reasons to itself; it is simply that the best way to explain their behaviour is to treat them as though they did.

Our evolutionary history has furnished us with mechanisms that predispose us to find intentions and agency everywhere. In prehistory, these mechanisms helped our ancestors avoid predators and develop altruism towards their nearest kin. These mechanisms are the same ones that cause us to see faces in clouds and anthropomorphise inanimate objects. No harm comes to us when we mistake a tree for a bear, but plenty does the other way around.

Evolutionary psychology shows us how we are always trying to interpret any object that might be human as a human. We unconsciously adopt the intentional stance and attribute all our cognitive capacities and emotions to this object.

With the potential disruption that LLMs can cause, we must realise they are simply probabilistic machines with no intentions or concerns for humans. We must be extra-vigilant around our use of language when describing the human-like feats of LLMs and AI more generally. Here are two examples.

The first was a recent study that found ChatGPT was more empathetic and gave “higher quality” responses to questions from patients compared with those of doctors. Using emotive words like ‘empathy’ for an AI predisposes us to grant it the capabilities of thinking, reflecting and of genuine concern for others – which it doesn’t have.

The second came when GPT-4 (the latest version of ChatGPT technology) was launched last month and greater skills in creativity and reasoning were ascribed to it. However, we are simply seeing a scaling up of ‘competence’, with still no ‘comprehension’ (in Dennett’s sense) and definitely no intentions – just pattern matching.

In his recent comments, Hinton raised a near-term threat of ‘bad actors’ using AI for subversion. We could easily envisage an unscrupulous regime or multinational deploying an AI, trained on fake news and falsehoods, to flood public discourse with misinformation and deep fakes. Fraudsters could also use an AI to prey on vulnerable people in financial scams.

Last month, Gary Marcus and others, including Elon Musk, signed an open letter calling for an immediate pause on the further development of LLMs. Marcus has also called for an international agency to promote safe, secure and peaceful AI technologies, dubbing it a “Cern for AI”.

Furthermore, many have suggested that anything generated by an AI should carry a watermark so that there can be no doubt about whether we are interacting with a human or a chatbot.

Regulation in AI trails innovation, as it so often does in other fields of life. There are more problems than solutions, and the gap is likely to widen before it narrows. But, in the meantime, repeating Dennett’s phrase “competence without comprehension” might be the best antidote to our innate compulsion to treat AI like humans.

This article was originally published in The Conversation.

Photo by Steve Johnson on Unsplash.

Thank you, Neil, for this very thought-provoking essay. I agree, we should absolutely stop treating early 21st-century AI models like human beings. But don’t you suspect we are in the Ford Model T era when it comes to AI? Geoffrey Hinton wasn’t the only AI researcher leaving Google recently. Google engineer Blake Lemoine claimed that Google’s LLM system is sentient before getting himself fired. For the longest time I’ve held firm that computers are not in the world, that human knowledge is partly tacit, and therefore cannot be articulated and incorporated in a computer program. If we agree that “sentience” implies the ability to feel or have subjective feelings (conscious even) then yes, that kind of AI is far, far beyond where we’re at. But I don’t say never. I don’t think it’s an AI problem but rather a mind-brain problem – the problem of how to address the relation between mental phenomena and neural or physical phenomena. Should it turn out that the state of mind is the same as brain processes, that mental state is the same as the physical state of the brain, then it’s just a matter of time before computers become our brethren. Maybe!

No Jack, you misunderstand Neil’s brilliant article.

This article is in the tradition of Dennett and Grayling, who recognise that the only legitimate modern function for Philosophy is the clarification — using logic, semantics, and psychology — of problems arising purely from people ‘philosophising’. In this case through the misuse of terms and concepts such as ‘intelligence’, ‘intention’, ‘wants’, and ‘understanding’. He even explains (from cognitive science and evolutionary theory) why people have confused themselves by misusing such terms. Others have explained the same as instances of ‘promiscuous teleological intuition’ (Bakker, 2015; Kelemen, 2004).

Philosophy since Plato has assumed that absolutes like ‘consciousness’ and ‘intelligence’ exist in the world and we need to think hard to discover them. Scientists recognise that such concepts are human constructions, and work with operational definitions of them to discover facts about the world through systematic observation and experiment (the scientific method). You have fallen into the (unproductive) Philosophy trap by using terms such as ‘sentience’, ‘mind-brain problem’, ‘mind’, and even ‘brethren’ without operational definitions, as though they don’t need one. When one is agreed upon, the problems either become empirically solvable, or disappear completely.

Gary, I’m sorry you feel that way.

Perhaps I could have been a little clearer. Nowhere am I contradicting or disagreeing with Neil’s fine article. You’ll find that my remark “I don’t think it’s an AI problem but rather a mind-brain problem” is an attempt to suggest that it’s the very age-old chestnut in philosophy, going back to Descartes and his cabal, that needs clarification and definition.

I am of the view, however, perhaps a little off-subject (and possibly the source of your unease with my comment), that should we understand (scientifically) the mechanisms that reflect subjective experience emerging from a neuro-substrate, then we will have not only a better understanding of “self” in the “scientific method” but also of how that might contribute to future incarnations of AI, in anyone’s language.

I used the word “sentience” because it was what the Google engineer used before falling out of favour with Google management, and I felt it relevant because Neil had referenced Google’s Geoffrey Hinton. I would have thought my use of the word “brethren”, which you seem to have particular issue with, would have been seen for what it was: a tongue-in-cheek play on (a) being a part of humanity and (b) a reference to religiosity in an irreligious publication.

As for “in the tradition of Dennett and Grayling”, I can tell you upfront I stopped caring about Dennett and his musings a long time ago. It is as though he is still in 1965 and the main bad guy in his world-view is Descartes as Gilbert Ryle understood him, and that doesn’t seem true. Dennett’s discussions are so vague that they could fit with any number of actual theories of consciousness, dualism, and all his takes on the philosophy of AI, or should I say philosophical artificial intelligence, as he puts it.